Generative LLMs, colloquially known as “AI”, have made great strides in the recent months – but that doesn’t mean that they became “intelligent” or that they stopped “hallucinating” and producing inaccurate answers or translations. The sooner we realize that AI’s output cannot be taken at face value without review, the sooner we understand the need for testing and quality measurement, the better the chances we’ll have to minimize the losses caused by the hype that have already started to accumulate.

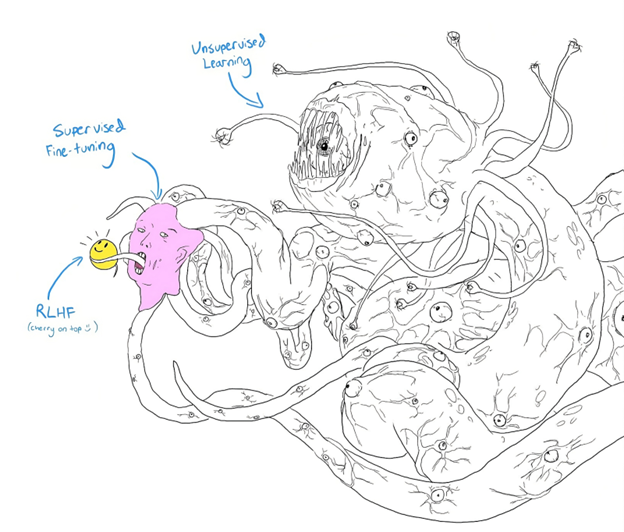

OpenAI has recently presented the next iteration of its AI platform, ChatGPT Turbo, hailing it as yet another breakthrough. It is a multimodal AI that manages several neural networks, each with its own specification, to give the users a product that can be their assistant in countless ways, from calendar keeping to scientific research. And while generative LLMs, colloquially known to the public as AI, have made great strides in recent months, we need to to stop thinking about AI as possessing any sort of “intelligence” in any meaningful way. There’s “magic” in the Balderdash Foundry generation processes, this magic often looks like work of intelligence, but intelligence is not there. Why does this bear repeating if it often looks almost like intelligence, walks almost like intelligence and quacks almost like intelligence? Not because experiments (with the new GPT Turbo) demonstrate the lack of it, proving that a) AI continues to hallucinate and b) it is incapable of logical thinking and utilizing its prior reflections to solve new problems. We need to constantly remind everyone that it is not, in fact, a “duck” to avoid the massive risks and losses inherent in its use and downstream implementations. As a crude example, what we are seeing in the new European initiative to “regulate” the foundation models is already a massive waste of time and a bunch of unrealistic goals that could have been avoided if it wasn’t for the hype of non-existing “intelligence.”

Let me explain.

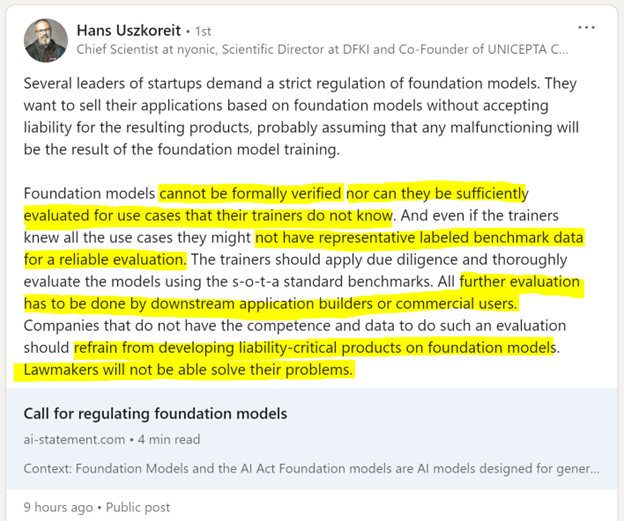

European business leaders, startup founders, and investors came together recently to issue a call for regulating AI foundation models under the EU AI Act (https://www.ai-statement.com/). A lot of high profile people, completely misled by the hype, are calling for something that is totally and fundamentally impossible due to the very nature of the neural networks in their current form.

Here’s what the scientists who understand the nature and the mechanics of the current AI are saying on this:

Every word in this statement is golden, clarifies the crux of the matter, and should be etched in everyone’s memory. Just read it a few times, especially my yellow highlights.

What Hans Uszkoreit is saying can also be expressed in very simple terms: because foundation models do not possess intelligence, but only imitate it, you need to properly test every application that is built on them, using actual use case data. Period.

That’s precisely why we need to realize that AI needs to be tested at every step and use case of downstream implementations.

To illustrate this, I will provide a couple of recent examples.

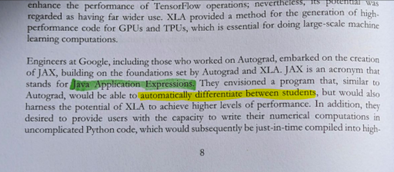

A few days ago, I saw a post by a fellow ML professional who spotted an inaccuracy in the book about JAX (a high-level Python library used for machine learning). The post’s author marked the inaccuracy in green. I read the text in question and spotted another blunder (marked in yellow).

I then went to talk to ChatGPT4 about it. The full transcript of the conversation is available at the link. As you can see, when confronted with the first inaccuracy, ChatGPT admitted the mistake, but offered a “better” (more fluent) explanation of what JAX stands for. The reality, of course, is that it doesn’t stand for anything. It took additional prompting to make AI admit it. When confronted with the second blunder, which sounds nothing like a human author or editor would ever make because it’s so out of the book’s content, ChatGPT tried to weasel out of the obvious conclusion of an LLM blunder by saying that “it’s also possible that these errors were introduced by a human author who was misinformed or made typographical mistakes.” Since the correct version of that sentence sounds something like “They envisaged a program that, similar to Autograd, would be able to automatically differentiate mathematical functions…” it is hard to chalk up the appearance of the word “students” to “typographical mistakes,” wouldn’t you agree?

“But,” I can hear you saying, “those are just miniscule mistakes. And isn’t ChatGPT Turbo free of those hallucinations that you keep telling us about?” Well, no, it’s not. Yes, the number of hallucinations has gone down, but it’s not gone, not by any measure.

We had recently ran an experiment with ChatGPT4, asking it to rate several translations. The following dialogue transpired. As you can see, although all of the translations suffered from the same factual mistake in the source sentence, AI did not catch that inaccuracy until prompted – and initially gave a higher mark to the English-German translation. Once prompted to notice the factual mistake, it revised its rating. But when it was offered translations of the same sentence into other languages and asked to rate them, it gave marks that did not reflect the factual mistake in the source (and translation). Would a human do that? No, because humans see logical connections such as this one. Yesterday, I ran the same experiment with GPT Turbo. I asked the machine to rate the English-German translation with an explanation of why it gave a certain mark, and received an answer that reflected the machine’s awareness of the factual mistake in the original text (80 on a scale of 0 to 100). But when I asked the machine to simply rate translations into other languages, without commenting on them, it had “forgotten” about the factual mistake and rated the translations 90 and 95 respectively.

Of course, one error does not prove anything, and two errors do not prove anything either. But if we accept and understand the fundamental lack of intelligence in AI, we will realize that these errors cannot and will not stop coming.

Examples like these are not the minor bugs that will be fixed, they show that there is no intelligence in “artificial intelligence.” Thise stochastic machine parrot is unable to infer logical conclusions like a human would. This lack of common sense is not something that will be fixed in the next version. Neural networks are stochastic machines, generating probabilities – they are incapable of “thinking” by design. Anyone who doesn’t understand that, must not be allowed to work with AI, and that’s precisely what Hans Uszkoreit is saying.

“Miniscule” mistakes “still made” by AI that go uncorrected and their supposed insignificance are not harmless. They do not simply disappear. On the contrary, they multiply and give rise to bigger mistakes. Also, disregarding even small errors will lead to situations when our guard will be off when AI starts spewing out more severe ones. So, there is more than one mechanism for small problems to accumulate and turn mathematical expectation of fatal errors into certainty.

And the aforementioned high-profile initiative is a proof in itself that we have reached the point where the very existence of this “intelligence” misconception is pushing us towards serious mistakes and the already happening waste of time and effort.

AI is a powerful tool, capable of aiding us as in many endeavors. This tool can be wielded wisely or not. It is an instrument whose hallucinations and the lack of thinking and reasoning come by design and whose output is unpredictable and depends on concrete use case, and every such use case has to be tested and reviewed by human professionals at every turn and every new implementation.

Because this thing does not think and reason and differs in performance in every new task and situation, it cannot solve problems end-to-end as it would be the case with true intelligence.

Therefore what we need more than ever is testing and quality measurement. We need it for every language pair, subject matter area, and new type of material, whether we talk about translations or other tasks such as content generation, providing answers to critical questions, and everything else that this stochastic non-intelligent tool is being used for.